This review was printed in the March 2020 issue of Chess Life. A penultimate (and unedited) version of the review is reproduced here, and minor differences exist between this version and the printed one. My thanks to the good folks at Chess Life for allowing me to reproduce it.

Kaufman, Larry. Kaufman’s New Repertoire for Black and White: A Complete, Sound and User-friendly Chess Opening Repertoire. Alkmaar: New in Chess, 2019. ISBN 9789056918620. PB 464pp.

Kaufman’s New Repertoire for Black and White: A Complete, Sound and User-Friendly Chess Opening Repertoire is the third incarnation of Larry Kaufman’s one-volume opening repertoire. While the first two – The Chess Advantage in Black and White: Opening Moves of the Grandmasters (2004) and The Kaufman Repertoire for Black and White: A Complete, Sound and User-Friendly Chess Opening Repertoire (2012) – were well-regarded, this new edition appears at something of an inflection point in the history of chess theory.

Opening theory has exploded over the past two decades, due largely to the influence of engines and databases. As one of the developers of Rybka and Komodo, among other important projects, Kaufman has made good use of engines in his writing, and both previous versions of this project proclaim the central role played by the computer. In 2004 it was Fritz, Junior, and Hiarcs, and in 2012 he used Houdini and especially Komodo.

Today the landscape has changed. The rise of artificial intelligence and neural-network engines, first AlphaZero and now Leela Chess Zero, is reshaping opening theory. In Mind Master, reviewed here last month, Viswanathan Anand relates that Caruana and Carlsen were the first elite players to make use of Leela in their 2018 match preparations, and that his trainer introduced it into their workflow at the end of that year. Chess authors have picked up on the trend, and works written under Leela’s influence are beginning to appear.

Kaufman’s New Repertoire is advertised as “the first opening book that is primarily based on Monte Carlo search.” This is somewhat imprecise – Leela’s evaluations come from the neural network, not game rollouts – but the point remains that Kaufman has chosen to make use of the newest technologies in writing his book. He relied on Leela and a special “Monte Carlo” version of Komodo to craft the repertoire, generally deferring to Leela’s view while reserving the right to serve as “referee” when the engines disagreed.

So what does Kaufman’s new repertoire look like? As the title suggests, the book contains a complete opening solution for both colors, focusing on 1. e4 for White, and the Grunfeld and Ruy Lopez for Black. Kaufman is covering a lot of ground here, generally offering two systems or ideas against most major continuations. In the mainline Ruy he offers readers three choices with Black: the Breyer, the Marshall, and the Møller.

The virtue of this approach is clear. Kaufman’s New Repertoire gives readers a one-stop opening repertoire, featuring professional lines, particularly with Black, and computer-tested ideas that can inspire confidence. But in an age where multi-volume single-color repertoires are increasingly the norm, is it possible to include enough detail in fewer than 500 pages?

Let’s dive a bit deeper and take a look at specific recommendations.

White: 1. e4

- vs Caro-Kann – (a) 4. Bd3 Exchange Variation, (b) 3. Nc3 dxe4 4. Nxe4 Bf5 5. Qf3!?, (c) Two Knights.

- vs French – Tarrasch Variation.

- vs 1. … e5 – (a) Italian Game, with multiple repertoire choices offered, (b) Ruy Lopez with 6. d3, and 5. Re1 against the Berlin.

- vs Sicilian – (a) 2. Nc3 ideas, including 2. Nc3 d6 3. d4 cxd4 4. Qxd4 Nc6 5. Qd2 and the anti-Sveshnikov 2. Nc3 Nc6 3. Nf3 e5 4. Bc4; (b) 2. Nf3 and 3. Bb5 against 2. … d6 and 2. … Nc6, and 3. c3 against 2. … e6, entering the Alapin.

Black: 1. e4 e5 and Grunfeld

- … Nf6 against the Scotch.

- … Bd6 against the Scotch Four Knights.

- … Bc5 in the Italian Game, focusing on 4. d3 Nf6 5. 0-0 0-0 and now 6. c3 d5, 6. Re1 Ng4, 6. a4 h6 followed by … a5, and 6. Nbd2 d6.

- the Breyer is the “best all-purpose defense” in the 9. h3 Ruy Lopez, but Kaufman also includes Leela’s favored Marshall Attack and the Møller, inspired by Anand.

- Neo-Grunfeld without … c6 vs the Fianchetto.

- f3 Nc6.

- …a6 against the Russian System.

- … Qxa2 and 12. … b6 against 7. Nf3 in the Exchange Variation.

- three options – 10. … Qc7 11. Rc1 b6, 10. … e6, and 10. … b6 – against the 7. Bc4 Exchange.

- c4 / 1. Nf3 – Anti-Grunfeld, Symmetrical English, and a tricky path into the Queen’s Indian Defense for transpositional reasons.

While chapter introductions explain his reasons for individual repertoire choices, Kaufman’s analysis revolves mostly around concrete lines, using annotated games as his vehicle. He tends to propose variations that avoid the heaviest theory with White, while turning to two of the most professional openings – the Breyer and Grunfeld – as the backbones of his Black repertoire.

In the Introduction Kaufman warns his readers that he omits “rare” responses from the opponent to save space for alternative ideas. These sidelines are unlikely to refute the repertoire, but readers will have to do some extra work to fill in the gaps.

The analysis in Kaufman’s New Repertoire is heavily influenced by the computer, and individual lines are usually punctuated with numerical evaluations from Komodo. This is not to say that the book is perfect. Novelty attributions are sometimes incorrect, although that may have more to do with differing databases than anything else. More worrisome are the analytical errors and omissions. Two examples:

(a) Kaufman recommends 8. Qf3 in the Two Knights, and after 1.e4 e5 2.Nf3 Nc6 3.Bc4 Nf6 4.Ng5 d5 5.exd5 Na5 6.Bb5+ c6 7.dxc6 bxc6 8. Qf3 he analyzes the two traditional mainlines of 8. … Be7 and 8. … Rb8. Checking his work, I discovered that neural net engines think sacrificing the exchange with 8. … cxb5 is fully playable, giving Black good compensation after 9. Qxa8 Be7 (Leela) or 9. … Qc7 (Fat Fritz). See the recent game Chandra-Theodorou from the 2019 SPICE Cup for an example of the latter.

Jan Gustafsson made an analogous, and equally Leela-inspired, discovery in his new (and outstanding) Lifetime Repertoire: 1. e4 e5 series for Chess24, analyzing 8. … h6 9. Ne4 cxb5 10. Nxf6+ gxf6 11. Qxa8 Qd7!, after which White’s best is to head for a perpetual.

While theory has traditionally considered the exchange sacrifice inferior, Leela’s approval of it is just the kind of discovery that Kaufman should have trumpeted here. Perhaps he didn’t believe what he was seeing, although it should be noted that Komodo verifies Black’s compensation.

(b) After 1.e4 e5 2.Nf3 Nc6 3.Bc4 Bc5 4.c3 Nf6 5.d4 exd4 6.e5!? d5 7.Bb5 Ne4 8.cxd4 Bb6 9.Nc3 0–0 10.Be3 Bg4 11.h3 Bh5 12.Qc2 we reach a “rather critical” position.

Here Kaufman discusses five moves: 12. … Bg6, 12. … Bxf3, 12. … Nxc3, 12. … Rb8, and 12. … Ba5!, which “may be Black’s only path to roughly equal chances.” (96)

I found two problems with the analysis, both involving Kaufman glossing over a poor move towards the end of a line, allowing him to claim an advantage for the side he is championing. After 12. … Bxf3, 18. … Nf5 is dubious; better is 18. … Ng6 as in Vocaturo-Moradiabadi, Sitges 2019. His analysis of 11. Qc2 is also flawed – check the PGN at uschess.org for more details. And these were not the only “tail-errors” I found in my study.

I’m torn on how to assess these analytical lapses. On the whole the book is well-researched and up to date, and the broad outlines of all Kaufman’s repertoire choices seem sound. So why are there these small problems, especially when the entire conceit of the book is its being computer-proofed, and with so many of the lines cribbed verbatim from the engine? I don’t have an answer to this, but I do wonder if Kaufman doesn’t suffer from a bit of confirmation bias.

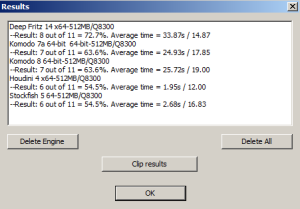

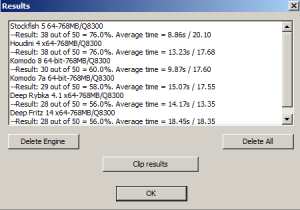

As one of the co-authors of Komodo, Kaufman surely trusts the engine a great deal, but the version used here – Komodo MCTS – is markedly inferior to traditional Komodo or Stockfish, and is rated some 200 points lower on most testing lists. Komodo MCTS has the advantage of being able to analyze multiple lines at once without a performance hit, but its (very relative) tactical shallowness can be a concern. Because Leela suffers from similar issues, it may have been smarter to pair Leela with traditional Komodo instead.

Kaufman’s New Repertoire for Black and White is a solid repertoire offering despite these problems. His recommendations are well-conceived, and I was impressed with how much Kaufman was able to stuff into these pages. There’s not a lot of conceptual hand-holding here, so readers will have to be strong enough – say 2000 and above – to get maximum value from the book, and many lines will require supplemental study and analysis for the sake of completeness. Still, for those looking for a one-stop repertoire, particularly from the Black side, Kaufman’s book might be just what the doctor ordered.